Hey folks, long time no see. Life has been pretty busy in the past few months, hasn't it? In order to make life easier for Math students across the UK, several students at Imperial, myself included, ran the 4th edition of the \(\href{https://icmathscomp.org/}{Imperial \ Mathematics \ Competition}\). This year, we went for an online format, kinda like the IMC last summer. Overall, we had an impressive amount of people attending the first round; over 120 students participated at the first round, the top 50 qualifying for the second one.

All problems were exciting and fun, the organizing team was excellent, and it was great working with likeminded individuals. In this post, I'll go over one of the two problems that I proposed: the 3rd problem from the second round. I thought of this problem while working on some integral inequalities. Here it is:

Let \(f,g,h\) be continuous functions and \(X\) a random variable such that \(E(g(X)h(X))=0\) and \(E(g(X)^2) \neq 0 \neq E(h(X)^2)\). Prove that \(E(f^2(X))-\frac{E^2(f(X)h(X))}{E(h^2(X))} \geq \frac{E^2(f(X)g(X))}{E(g^2(X))}\).

Solution: What do expected values and integrals have in common? Well, sure, expected values are integrals, but there is another thing; both can be written as inner products. For instance, \(<f , g> =\int_{0}^{2\pi} f(x) g(x) dx\) is an inner product. Similarly, one can think of \(E(XY)\) as an inner product between two vectors, which in this case are random variables, or functions thereof. Thus, this problem is actually linear algebra in disguise!

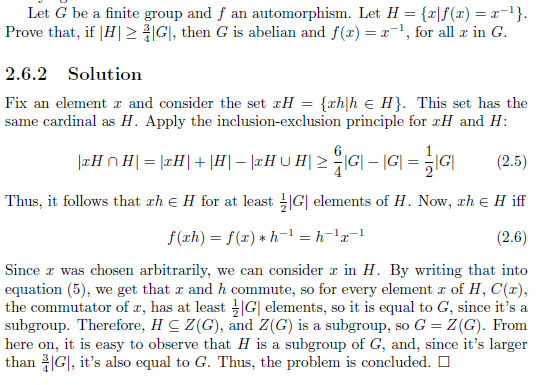

Let's rewrite it using only linear algebra. We now have to prove that, if \(f, g, h\) are vectors on a vector space over \(\mathbb{R}\) such that \(<g, h>=0\), \(<g,g> \neq 0 \neq <h,h>\), the following inequality holds:

\(\begin{equation} ||f||^2 \geq \frac{(f,g)^2}{||g||^2} + \frac{(f,h)^2}{||h||^2} \end{equation} \)

For any \(\lambda \in \mathbb{R}\), we have \(\lambda (f,g)= (g,h+\lambda f) \leq ||g|| \cdot ||h+\lambda f||\), by Cauchy. Squaring, we obtain that \(\lambda^2 (f,g)^2 \leq ||g||^2 \cdot ||h+\lambda f||^2\). Now dividing by \(||g||^2 \neq 0\) yields \[\lambda^2 \frac{(f,g)^2}{||g||^2} \leq ||h+\lambda f||^2 = ||h||^2 + 2 \lambda (f,h) + \lambda^2 ||f||^2.\] We can take everything on the right side, to obtain that

\(\begin{equation} \lambda^2 (||f||^2 - \frac{(f,g)^2}{||g||^2}) + 2 \lambda (f,h) + ||h||^2 \geq 0,\end{equation}\)

for all \(\lambda \in \mathbb R\). We consider this a quadratic equation in \(\lambda\), and use the fact that, for the equation to always be positive, we need the discriminant to be smaller or equal to 0. We conclude that \(||f||^2-\frac{(f,h)^2}{||h||^2} \geq \frac{(f,g)^2}{||g||^2}\), which solves the problem.

This was fun, right? The inequality stated works for any inner product space; I could have written integrals, or the dot product, or anything that came to mind. Thus, the solution is as general as possible. You know who else likes generalisations? My old buddy Lean.

I wrote inner products and the Cauchy inequality from scratch; they were already written in Lean's library in a much more efficient way than I could have come up with, but I felt that proving all that I needed myself would be a better exercise. \(\href{https://gist.github.com/Soccolo/0ca77b63d90556dc94897e65845439a8}{Here}\) is the code.

In the beginning, I defined what an inner product is; you may observe that it is the real inner product, as I haven't thought about making the inequality more general. I also proved some properties of inner products; the function \(f\) is a general inner product, so any result about it is a result about the inner products I defined. Cauchy-Schwarz was pretty interesting to prove; I am certain that there is a way easier way of proving it, but I am still a Lean novice. After Cauchy-Schwarz, we delve immediately into the problem at hand; we prove some lemmas and small results, and compile them together in a neat way to get a proof.

Every 'have ...' is some sort of lemma inside a proof. The logic is the classical 'divide and conquer' idea; I split the problem into pieces, then glued them together to get a result. Lean treats each such lemma inside the proof as a proof itself; when you use a result in Lean, you don't quote the result, you actually use a proof of the result. Thus, through each 'have ...', I got lots of results that I used in order to prove the Cauchy inequality and the contest inequality. I am not the best person to explain how Lean works, so why don't you check out the community's \( \href {https://leanprover-community.github.io/}{website}\)?

It's a fun time to be alive if you're a Mathematician. Lean 4 has been released and it's still being worked on, the Maths community is more intertwined than ever, the quantum world is unravelling before our eyes, big data is beginning to play a huge role in the world's affairs. Tune in next time for another dive into what Maths has to offer, or something completely random!

Cris.