I'm back to the usual content, everyone! Well, not really usual, since this problem is more of a puzzle than a problem, but you get the point. No more psychology articles for a while. Anyways, this puzzle comes from the Moscow Maths Olympiad (or the Mexican Maths Olympiad; I don't really know what MMO stands for when it comes to Maths) from 1995, and it goes something like this:

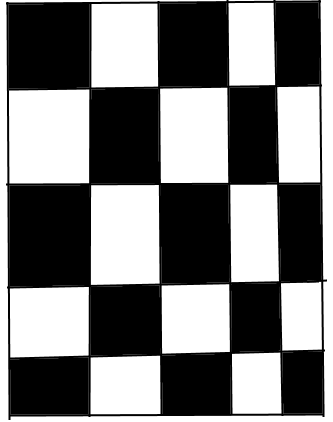

Suppose you have 4 congruent right-angled triangles. After each step, we cut one of the triangles along its height. Prove that, after any number of steps, there are still at least 2 congruent triangles.

Solution:

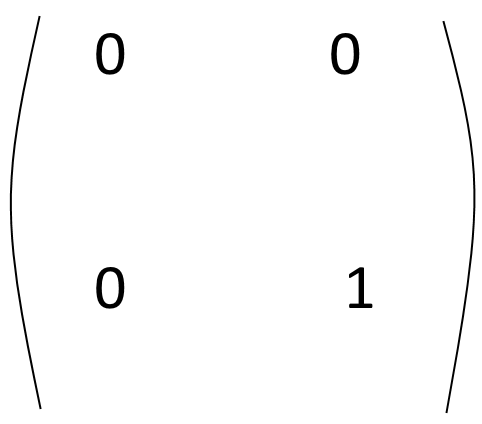

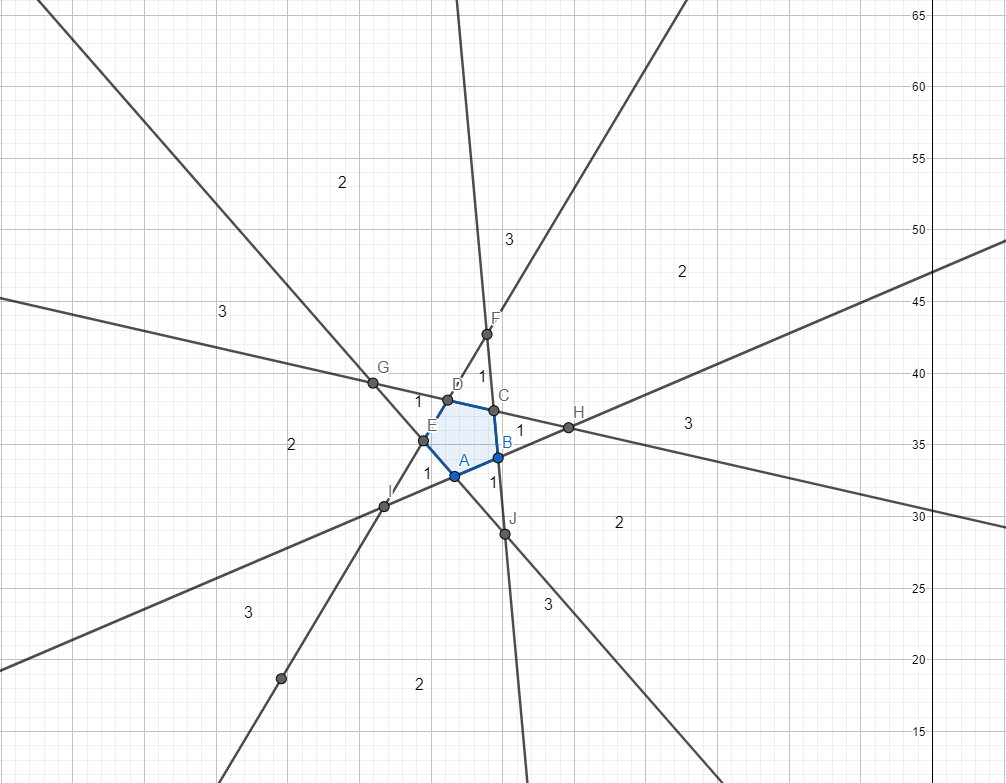

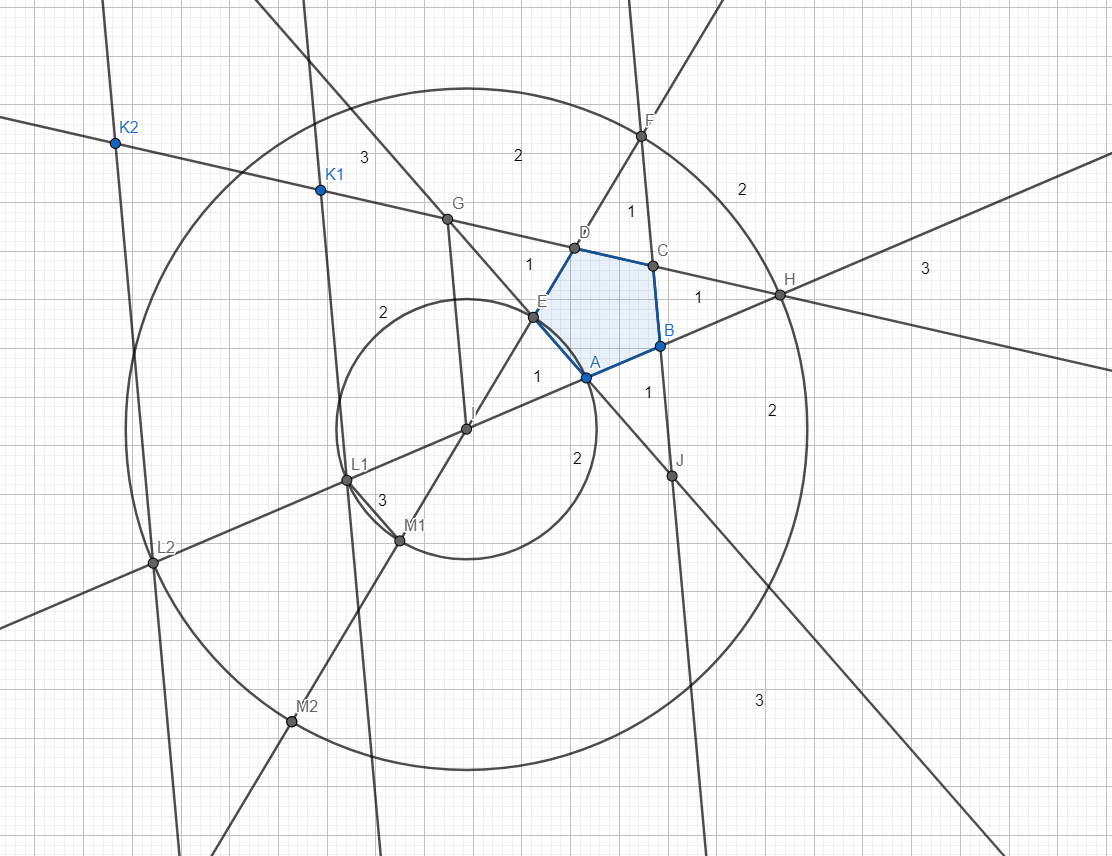

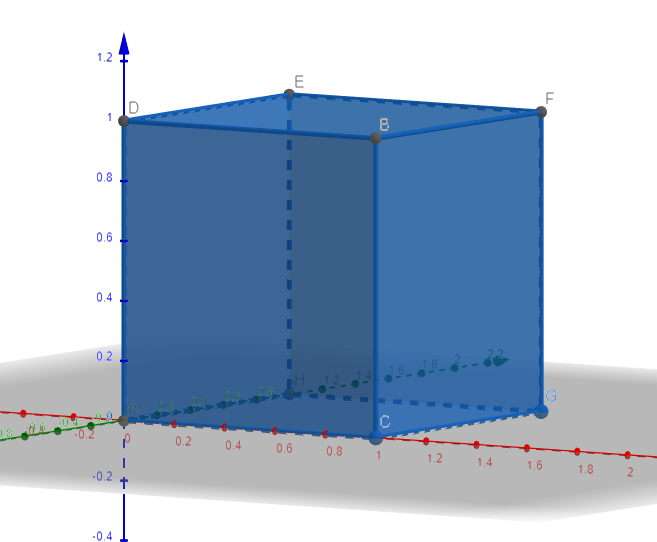

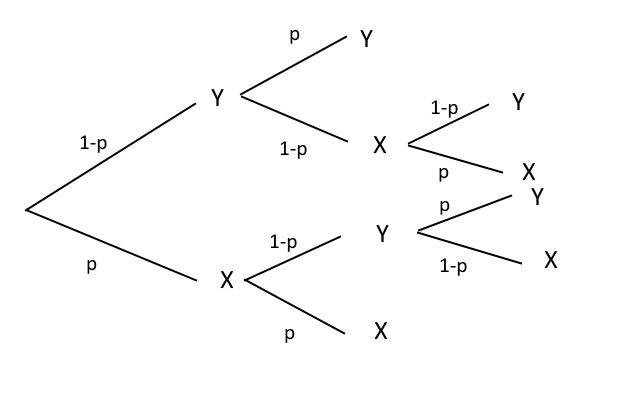

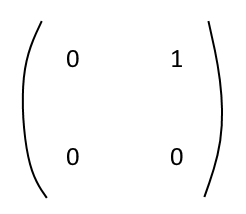

Let the division of the longer leg of the triangle over the smaller leg be equal to \(x\). Thus, when we cut a triangle along the height, we get two smaller triangles with areas proportional to \(x\) and \(1-x\). Let us presume that the initial area of the triangles is 1. At the first step, we have 4 triangles, which have \(x\) and \(1-x\) 0 times in the formula of their areas, so we write that

$$S(0) = (0,0) (0,0) (0,0) (0,0).$$

After the first step, one triangle is split in two parts, one of area \(x\) and one of area \(1-x\), so

$$S(1) = (1,0) (0,1) (0,0) (0,0) (0,0)$$ because \(x\) and \(1-x\) appear only once in the formula of the area of two triangles. Continuing this, we get that, at the p-th step,

$$S(p) = (m_1, n_1) (m_2, n_2) ... (m_{p+4}, n_{p+4})$$

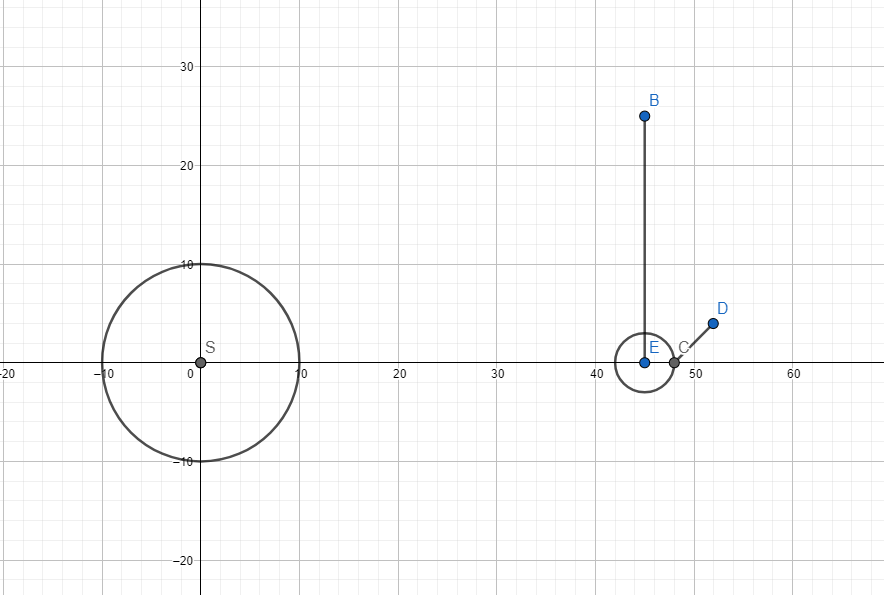

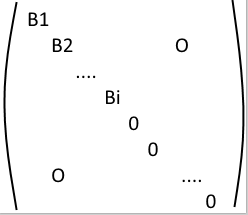

You may see where I'm going with this. We can represent all these pairs as positive lattice points. For two triangles to be congruent, their respective lattice points must be the same. Now, let us presume that, at the start of the game, we put 4 coins of value 1 on the lattice point (0,0). After the first step, we move half a coin on (1,0) and half a coin on (0,1), and, after each step, this process continues. In order for two triangles to be congruent, there must be 2 coins on at least one lattice point. We shall prove that, if there is one coin in all the lattice points, then the sum of values is smaller than 4, which should conclude the proof.

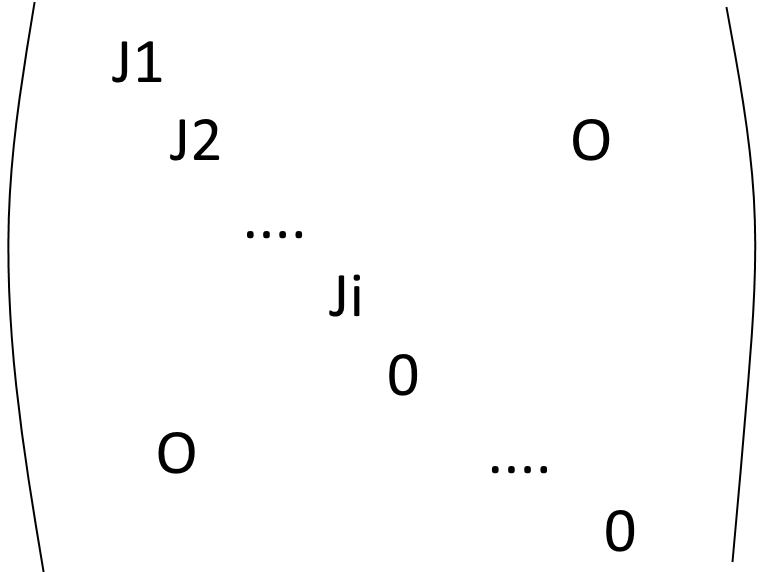

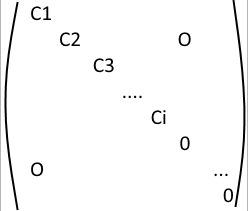

The number of coins on each lattice point is dependent on the position of the lattice point. The coins in the point (m,n) have value \(2^{-m-n}\). Thus, we have to prove that

$$\sum_{n∈ℕ} \frac {n+1}{2^n}<4$$

This series, as shown by Wolfram Alpha (https://www.wolframalpha.com/input/?i=sum+n%3D0...+infinity+(n%2B1)%2F2%5En), converges to 4, and is strictly increasing, so the value of the coins is always smaller than 4, which leads to a contradiction. The series could have been proven to be smaller than 4 by hand, but I preferred using Wolfram Alpha for a quicker result.

Since the sum of values in all positive lattice points is 4, this means that at least a lattice point has 2 coins on it, which concludes the proof.

I found this problem really interesting, mainly because of the ingenuity required to solve it. I found it in Arthur Engel's excellent book 'Problem Solving Strategies' (did you really think I'd go as far as to search the Mexican Maths Olympiad from 1995?) while preparing for my interview at Oxford. Apologies for not posting anything for the past two weeks, I was in Oxford for about a week, and then I had to catch up with my homework, so it has been a pretty rough period. Tune in next time for the first post under the 'Theory' tag, which has been a long time coming!

Cris.

,

,  .

.